Darn, I wish that when we try to delete projects, the forums would be searched for any links to them, and then it could give you the option of cancelling or continuing the deletion…

Hi Ric,

Well, that's unfortunate. A side tip: I use a secondary account to store valuable projects. Maybe you can try doing that for future projects. Is there a way we can replicate those runs and try to eliminate the various inconsistencies, so that the relaxation factors are isolated as the only variable? I can help with the 2D mesh generation, which will give shorter run times on top of removing the additional trouble with 3D mesh generation and the extra variables in 3D turbulent flow.

I made a version 2.05a for you that shows both pressure and viscous moments for all 3 axes…

Here is a link: yPlusHistogram_v205a.zip

Thank you so much! It appears to be invalid for some reason. I can't open anything.

What are you trying to open? I had to allow the new exe to execute in my Windows Defender, and then it seems to work…

Dale,

Sorry, I had replied, but I think it didn't go through somehow.

Anyway, do I have to make a firewall allowance for this EXE?

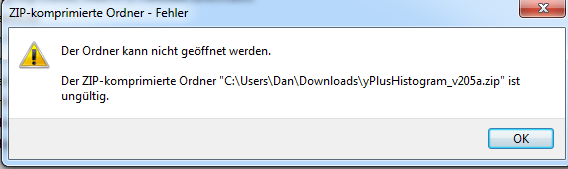

When I download it, I get this:

Then when I resume the download and try to open it, I get this:

(The file cannot be opened.)

(The ZIP “…” is invalid.)

When I try to extract the ZIP contents, it says the folder is empty.

Is this more of an update file, or do I need to delete the old version so this one will work?

Dan

It is not an update, just an updated .exe file. You can have as many copies of my exe files as you wish on your computer, each one will run independently of the others…

I use the free 7-Zip program to unzip zip files. I just double-click the zip file and then drag the exe file in it to anywhere I want on my computer. Then the exe file can be executed by double-clicking it (making sure your virus software lets you execute it, by allowing it as an exception when it warns you that it could contain code that harms your computer).

I have downloaded the zip file twice now and it unzips fine for me… I suspect that your antivirus program is stopping you from accessing the exe file in the zip file…

The version of the program is only visible after you start the exe file: the version number will appear in the title bar of the program's window. If you wish, you can rename the exe after you extract it to reflect the version (yPlusHistogram_v205a.exe).

Hey everyone,

I'm having more trouble with the convergence of my 7 meter simulations. I have run 4 different wing setup simulations, and luckily I decided to check on them, only to find that they are all divergent. I thoroughly checked the sim settings as well as the mesh and didn't find any irregularities. I then used one of the runs (Radius_7_Run_1.3) for testing.

So far I have had divergence on every run. Sim Run 1 had a mistake and is not considered.

Here is the link for Sim Run 2:

I have been slightly modifying the relaxation factors and the number of non-orthogonal corrector loops to see if I can achieve convergence, but nothing has worked so far. Hopefully someone can help.

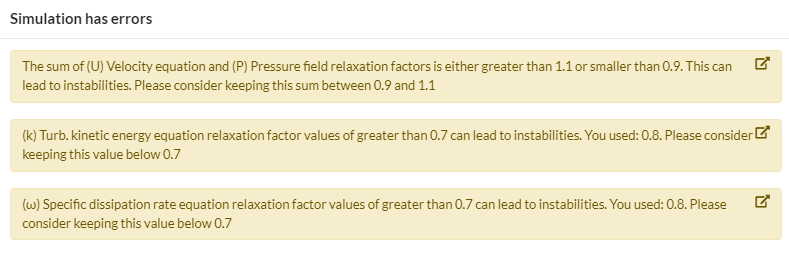

Also, as soon as I try to change the relaxation factors from the default settings, this “error” shows up. Is this more of a warning?

Radius_7_Run_1.3

Sim Run 2

P - 0.3

U - 0.7

K - 0.7

W - 0.7

Non-Ortho Loops - 1

Result - Divergence

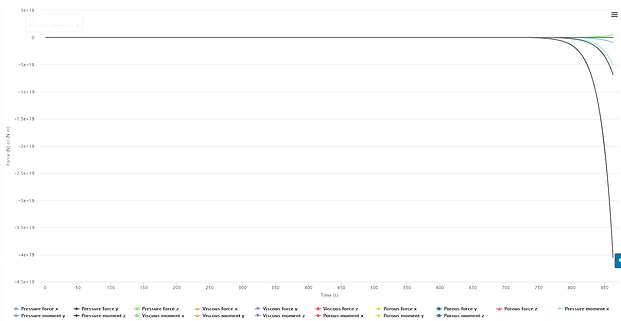

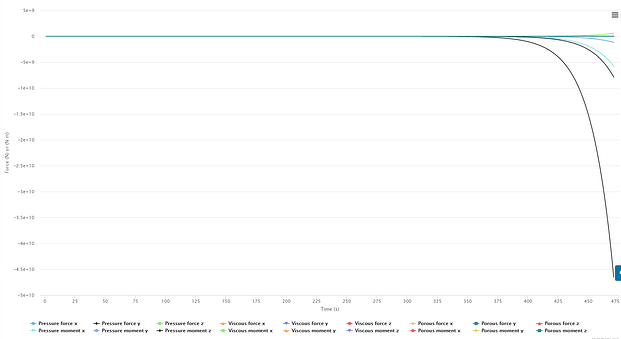

Force Plot

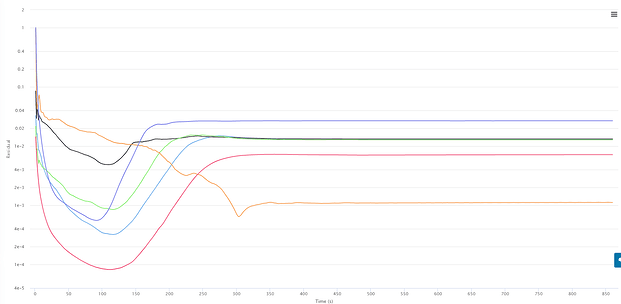

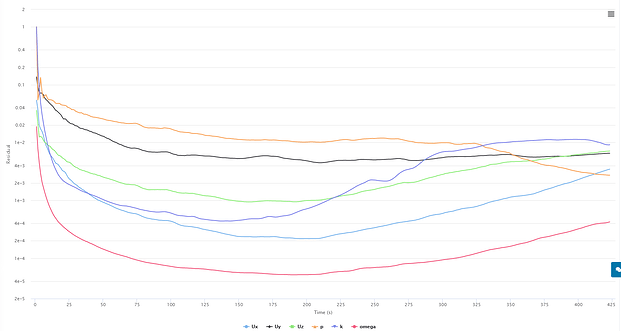

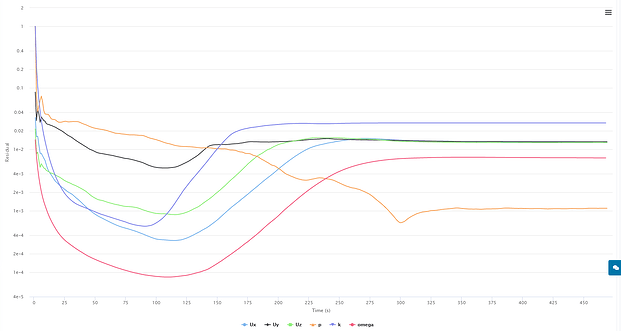

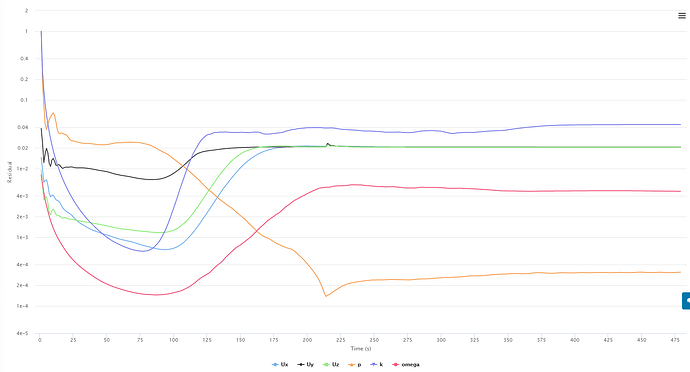

Convergence Plot

Sim Run 3

P - 0.4

U - 0.8

K - 0.8

W - 0.8

Non-Ortho Loops - 2

Result - divergence

Force Plot

Convergence Plot

Sim Run 4

P - 0.3

U - 0.7

K - 0.7

W - 0.7

Non-Ortho Loops - 2

Result - divergence

Force Plot

Convergence Plot

For the next run I am trying tighter relaxation factors, because in a post from this thread (Most Popular Errors of Simulation - #3 by jprobst), Johannes says that LOWERING the values of p, U, k, and w will help with convergence. Based on the description from OpenFOAM, I had thought that values closer to 1 help with convergence.

Could my simulation be diverging this time because the factors are too loose? As in, the default values already accept too much of each new iteration's result, making each successive calculation have larger and larger residuals?

Sim Run 5

P - 0.2

U - 0.5

K - 0.5

W - 0.5

Non-Ortho Loops - 2

Result - diverged

Force Plot

Convergence Plot

Dan

Hi Dan

Stronger under-relaxation slows down convergence by limiting the update that happens between iterations. Here is an extremely simplified thought experiment to demonstrate the point.

Imagine the velocity in a channel flow with an initial condition of 0 m/s and a BC which pushes the flow in the positive x direction at 1 m/s. In the process of convergence, the solution must ramp up from 0 m/s to 1 m/s. Due to the nonlinearity of the Navier-Stokes equations, the solution must be found iteratively, which makes the problem harder. For example, there is the risk that the solution overshoots.

In the first iterations, the solver usually sees a huge discrepancy between the initial conditions and boundary conditions: the flow ought to be somewhere around 1 m/s, but currently it is at 0 m/s. Therefore, the physical thing to do is to accelerate the flow massively. This is how the solution can overshoot, and we may end up with 100 m/s in the next iteration, especially if other factors are at play (bad mesh, turbulence model with a bad estimate for the BCs).

Under-relaxation slows down convergence on purpose. Instead of allowing the solver to go all the way, we only go a certain fraction of the way. An under-relaxation factor of 0.3 means that we only apply 30% of the change. This slows down convergence and may improve stability. An under-relaxation factor of 1 would be maximally aggressive in terms of convergence speed. A value of 0 doesn't make sense because it effectively disables the iterative solution process altogether.
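To make the thought experiment concrete, here is a minimal Python sketch of the relaxed update described above, phi_new = phi_old + alpha * (phi_raw - phi_old). It is a toy under a loudly stated assumption: the hypothetical `solver_update` always overshoots the 1 m/s target by 3 times the current error, which is not how any real CFD solver behaves. In this toy only factors below 0.5 converge, so it illustrates the trend (too little relaxation diverges, heavier relaxation is stable but slower) and says nothing about which factors suit a real case.

```python
def solver_update(phi, target=1.0, overshoot=3.0):
    """Hypothetical 'raw' solver update that overshoots the target.

    Toy assumption: the unrelaxed update lands on the far side of the
    target, 'overshoot' times as far away as the current error.
    """
    return target + overshoot * (target - phi)


def iterate(alpha, phi0=0.0, tol=1e-6, max_iter=200):
    """Apply under-relaxation: phi <- phi + alpha * (phi_raw - phi).

    Returns (iterations_used, final_phi, converged_flag).
    """
    phi = phi0
    for i in range(1, max_iter + 1):
        phi_raw = solver_update(phi)
        phi = phi + alpha * (phi_raw - phi)  # only apply a fraction of the change
        if abs(phi) > 1e6:  # treat blow-up as divergence
            return i, phi, False
        if abs(phi - 1.0) < tol:
            return i, phi, True
    return max_iter, phi, False


for alpha in (1.0, 0.7, 0.3, 0.1):
    n, phi, ok = iterate(alpha)
    status = "converged" if ok else "diverged"
    print(f"alpha={alpha}: {status} after {n} iterations (phi={phi:.4g})")
```

With these toy numbers, 1.0 and 0.7 blow up, while 0.3 and 0.1 both reach 1 m/s, with 0.1 needing more iterations than 0.3.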

Therefore, lower relaxation factors improve stability. They trade speed for stability (a very common tradeoff in CFD).

There is a bit more to say about relaxation factors: for example, the factors for the turbulent quantities (k and omega) should be equal, the factor for velocity should be higher than the one for pressure, etc. Good starting values are 0.7 (velocity) and 0.3 (pressure). For more stability you can try 0.3 and 0.1, for example.
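The rules of thumb above can be written down as a quick sanity check. This is a minimal sketch, assuming exactly the rules stated in this post (every factor in (0, 1], k equal to omega, velocity above pressure); `RelaxationFactors` and `check_rules_of_thumb` are hypothetical names, not part of SimScale or OpenFOAM. It is applied to the sets from Sim Runs 2 to 5 in this thread, plus one deliberately bad, made-up set.

```python
from dataclasses import dataclass


@dataclass
class RelaxationFactors:
    p: float      # pressure
    U: float      # velocity
    k: float      # turbulent kinetic energy
    omega: float  # specific dissipation rate (the "W" in the runs above)


def check_rules_of_thumb(rf):
    """Return a list of rule-of-thumb violations (empty list = none found)."""
    problems = []
    for name, value in vars(rf).items():
        # 0 would freeze the solution; above 1 would be over-relaxation
        if not 0.0 < value <= 1.0:
            problems.append(f"{name}={value} is outside (0, 1]")
    if rf.k != rf.omega:
        problems.append("k and omega factors differ")
    if rf.U <= rf.p:
        problems.append("velocity factor is not higher than pressure factor")
    return problems


runs = {
    "Sim Run 2": RelaxationFactors(p=0.3, U=0.7, k=0.7, omega=0.7),
    "Sim Run 3": RelaxationFactors(p=0.4, U=0.8, k=0.8, omega=0.8),
    "Sim Run 4": RelaxationFactors(p=0.3, U=0.7, k=0.7, omega=0.7),
    "Sim Run 5": RelaxationFactors(p=0.2, U=0.5, k=0.5, omega=0.5),
    "hypothetical bad set": RelaxationFactors(p=0.7, U=0.3, k=0.5, omega=0.8),
}
for name, rf in runs.items():
    print(name, check_rules_of_thumb(rf) or "OK")
```

All four posted sets pass, which only means they follow these rules of thumb, not that they are suitable for this particular case.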

Regarding that warning message: just ignore it. I think the message shouldn't be shown in the first place; there has been some discussion about it on our side, and I believe it doesn't make much sense. It's a yellow message, so you can override it (errors are shown in red and cannot be ignored).

Johannes

Johannes,

Thanks so much for the detailed explanation of relaxation factors! My research had led me to believe that they function in a different way. I thought relaxation factors mainly had to do with scaling the residuals, not the whole term itself.

As in, moving from 0 m/s to 1 m/s, the simulation would ramp up to maybe 1.3 m/s and then, based on the known target of 1 m/s, converge to this level. I guess we're saying the same thing; I had just visualized it as the solver making a more accurate initial guess, after which, depending on the relaxation factor settings, the solution would oscillate around 1 m/s with:

a deviation of ±0.1 m/s, for faster convergence and less need for accuracy (RFs closer to 1)

or

a deviation of ±0.02 m/s, with longer sim times and more need for accuracy (RFs closer to 0)

I think the warning message should still be shown, but it should not say “simulation has errors” and should not say “can lead to instabilities”. It would be better to describe the effects on the simulation when these values are changed and, depending on what the user needs (speed or accuracy), recommend certain settings.

I am also thinking that after 5 divergent simulations, there must be a setting or decimal place I missed. I guess I have to search even harder for the error.

Dan

Johannes,

I was unaware that these recommendations existed. Where can we read more about them?

Dale,

I double-checked this with our documentation maintainer and he said we don’t have anything about relaxation factors yet. The entire numerics section is high on the backlog, though. So it will come at some point in the near future, I don’t have an ETA though.

Johannes

Dale,

I'm not sure if this is helpful, but I have found a small section in the book The Finite Volume Method in Computational Fluid Dynamics that might shed some light on this: Section 8.9, page 256. I'm sure there's more information out there on recommended values.

Dan

Thanks Dan,

I believe that Section 8.9 only restates, in more mathematical terms, what the quote from LuckyTran states in my starting post of this thread…

Section 8.9 says “it should be noted that at convergence ϕC and ϕC* become equal independent of the value of relaxation factor used”
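The quoted statement follows directly from the relaxed update itself: once the relaxed value stops changing, the raw value must equal it, whatever the factor. A minimal sketch, assuming a single scalar equation written as a fixed-point problem phi = g(phi) (here g = cos, chosen only because it converges for every factor tried); `relaxed_fixed_point` is a hypothetical helper, and the variable names are not the book's notation. Different factors take different numbers of iterations but stop at the same value.

```python
import math


def relaxed_fixed_point(g, alpha, phi0=0.0, tol=1e-12, max_iter=10_000):
    """Iterate phi <- phi + alpha * (g(phi) - phi) until the update stalls.

    At a fixed point phi_new == phi, which forces g(phi) == phi regardless
    of alpha (as long as alpha != 0).
    """
    phi = phi0
    for n in range(1, max_iter + 1):
        phi_new = phi + alpha * (g(phi) - phi)
        if abs(phi_new - phi) < tol:
            return phi_new, n
        phi = phi_new
    raise RuntimeError(f"no convergence for alpha={alpha}")


for alpha in (1.0, 0.7, 0.3):
    phi, n = relaxed_fixed_point(math.cos, alpha)
    print(f"alpha={alpha}: phi={phi:.10f} after {n} iterations")
```

This is the idealized one-equation case; it does not model the meshing or solver differences behind the runs discussed in this thread.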

The data and runs that were provided in the beginning of this topic do not seem to confirm those statements.

I just re-read this topic and your above statement jumped out at me. I am surprised I did not respond at the time.

Anyway, I have come to believe that changing quality factors does not make any difference in the results or convergence. They just make you feel better, since they are the benchmark for illegal cell determination. I could be wrong about this, and I would really like to be set straight if I am.

I personally do not fully understand what is really going on here. However, my guess is that the quality metrics only tell the algorithm, during the meshing process, which cells to go back and fix. So after the first round of snapping/castellation/layer addition, ANY cell above ANY of the set quality metrics is handed over to the RELAXED snapping/castellation/layer addition settings specified in the mesh settings tree.

For example, with the max non-ortho setting at 70 deg, there are 2000 illegal cells. This tells the algorithm to try to re-snap or move these 2000 cells based on the looser snapping rules set in the settings. It will most likely not be able to move all 2000 to a legal position, resulting in 1890 illegal cells at the end of meshing. However, 110 cells were moved to a position where the non-orthogonality is below 70 deg… a good thing.

Now max non-ortho is set to 75 deg, resulting in 154 illegal cells. The same process as above ensues, and the end mesh result is 74 illegal cells. So now we have 80 cells in a legal position, but this legal position is under 75 deg, not under 70 deg, which I would assume is not the better solution.

HAVING SAID THAT… now the question becomes: does the max non-ortho setting of 70 deg, instead of 75 deg, move more of the illegal cells that have a very HIGH degree of non-orthogonality (85-90 deg)? As in, the really bad cells that cause mesh and simulation failures.

It would be interesting to run meshes and simulations with varying values of max non-ortho from 70 to 89 deg, ignoring the illegal cell count output, and see where the failure point is.

My opinion is still that a geometry will inherently produce non-orthogonal cells, and only changing the geometry, or its position in the meshing domain, will drastically affect the non-ortho count.

Dan

I was hoping my unscientific belief was wrong

Sounds like your understanding at this point is much better than mine.

What about the cells that end up between 70 and 75 degrees when you set it to 75 and then mesh? The algorithm thinks the 70 to 75 ones are OK, so it likely does not move them, because only cells above 75 are illegal. This is why I think increasing to 75 may be a losing battle.

If the algorithm could have gotten rid of the few nasty ones that really matter (85-90), it likely would have done so even when set to 70… Just rambling… Maybe it can never reduce an 85 cell to <70 no matter what value we put in, or maybe to reduce the 85 cell it needs to put many 75 cells around it. I just can't figure out why it would mesh any more diligently with different settings, but I guess it somehow does…

As a rule, bad cells (those that affect results and convergence) are >70, so when we set it to 75 we lose the ability to count the ones still between 70 and 75.
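The counting point above is pure bookkeeping: an illegal-cell count at a threshold only sees cells above that threshold. If you had per-cell non-orthogonality angles (how to get them out of the mesher is outside this sketch), binning them keeps the 70-75 deg cells visible whatever the quality setting. The angles below are made up for illustration and do not come from any mesh in this thread; `count_above` and `band_report` are hypothetical helpers.

```python
def count_above(non_ortho_deg, threshold):
    """Count cells whose non-orthogonality (degrees) exceeds the threshold."""
    return sum(1 for angle in non_ortho_deg if angle > threshold)


def band_report(non_ortho_deg, thresholds=(65, 70, 75, 80, 85)):
    """Count cells between consecutive thresholds, plus those above the last."""
    report = {}
    edges = list(thresholds)
    for lo, hi in zip(edges, edges[1:]):
        report[f"{lo}-{hi}"] = sum(1 for a in non_ortho_deg if lo < a <= hi)
    report[f">{edges[-1]}"] = count_above(non_ortho_deg, edges[-1])
    return report


# Hypothetical angles for a handful of cells (not from any mesh in this thread)
angles = [42.0, 66.5, 71.2, 72.8, 74.9, 76.3, 79.0, 86.4, 88.1]

print("illegal at 70:", count_above(angles, 70))  # 7
print("illegal at 75:", count_above(angles, 75))  # 4
print(band_report(angles))
```

Raising the threshold from 70 to 75 drops the three cells in the 70-75 band from the illegal count without changing them, while the two cells above 85 are counted either way.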

Yes, it could take a lot of core hours to investigate this and I don’t have many right now…

My understanding is just a guess, nothing more haha.

Yes, I agree that changing from 70 to 75 wouldn't help that much, as the cells between these values are left out. You could argue for going the other way and using 65 as the max value, handing more cells to the relaxed settings, but this does not really solve the problem.

I am not even close to the experience level in meshing where I can make a good observation on this, but from what I have done so far, changing the geometry has done the most.

Second place would be increasing mesh fineness.

Third would be layer addition changes.

Pretty much anything that makes the algorithm snap cells to another location, either on the surface or in space. This is why the mesh quality feature was a game changer for me. I used it extensively when checking meshes for really bad cells. Then I could go back and add a chamfer or fillet, and the mesh would snap to a new location, solving the problem.

I do not think you layered the locations where you were having the non-orthogonality issues. Were they close to the geometry surface?

Imagine the layer deflation that would happen around them if they were…

Yes, I layered just about the whole car. A lot of my problems came from the wheels, and I did see layer deflation there. I did a lot of testing, both with geometry changes and with the mesh settings, to try to reduce the non-ortho cells there. While I did get rid of many bad cells, they were never all gone. I also had a fairly constant mesh failure problem with three adjacent cells sharing the same face, but that's another story…