Nope, it is pretty unique…

The application may be unique, but the simulation steps, mesh setup, and results will vary in the same way; this is why validation is important. We might not be simulating the exact same thing, but validation and verification will confirm that the mesh is fine enough and the assumptions are fair (there may be other reasons, but these are the two usual reasons for poor CFD results). As for building a plane without ever checking your setup, that might be bad (unless it works, then you’re lucky).

I guess what I am saying is that it’s all very well and good simulating a unique craft, but maybe the first step should be simulating a ‘normal’ craft with an abundance of data, to ensure we are not making poor assumptions.

But in terms of this mesh independence study, I am not sure I understand the relevance of the % of largest volume. I think it just overcomplicates things. Do the graphs look similar without it? And do they reach the same level of convergence?

Best,

Darren

I am under the impression that we can expect the mesh with the highest number of volumes to be the most representative of ‘correct’ results. If that is the case, then all other meshes can be referenced to the results of that mesh. The shape of the graphs will be the same, but with my referencing we can see at a glance by what percentage each coarser mesh differs from the highest-volume mesh. It just saves you from trying to work out that percentage range in your head.
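The referencing described above can be sketched in a few lines of Python. This is only an illustration: the function name `percent_of_finest` and all cell counts and lift values are made up, not data from the study.

```python
def percent_of_finest(cell_counts, values):
    """Express each mesh's result as a percentage of the result
    obtained on the mesh with the highest number of cells."""
    # Index of the mesh with the most cells (the reference mesh).
    finest = max(range(len(cell_counts)), key=lambda i: cell_counts[i])
    reference = values[finest]
    if reference == 0:
        raise ValueError("reference value is zero; a percentage is undefined")
    return [100.0 * v / reference for v in values]


# Hypothetical numbers, for illustration only.
cells = [1_000_000, 3_000_000, 6_000_000, 13_000_000]
lift = [0.42, 0.45, 0.46, 0.465]

for n, pct in zip(cells, percent_of_finest(cells, lift)):
    print(f"{n:>12,d} cells: {pct:6.1f}% of the finest-mesh value")
```

Note that the percentage is undefined when the reference value is zero, and becomes very sensitive when it is close to zero.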

Wow, so I looked to NASA and found the ‘Tutorial on CFD Verification and Validation’, where it says this about Verification and this about Validation.

It is going to take me a while to absorb all that. Darn, I just want to have some Force and Moment results that can be expected to be within a range of say +/- x % of what I will see if I actually build this plane.

I never build things without designing them first, and I see CFD as part of the design process. Checking the setup at the various design stages is done as a matter of course. I just want to know how much I can rely on the CFD results; this will determine things like how many different scale models to build and test before the manned version, etc.

I am using ‘normal’ airfoils on what is basically a canard design with a unique fuselage. It should not be much of a stretch to expect CFD to give me some valid results without having to build a wind tunnel model to see how CFD did. If I have to do that, then maybe I should just do wind tunnel models. What I really need to determine is which mesh I should use, and to come up with an estimate of how far the real-life plane’s ‘Forces and Moments’ can be expected to vary from the CFD results.

Any ideas how I can achieve my goals from where things stand now?

Yes, haha, but that wasn’t the point I was making! I have no doubt at all that CFD is capable! The question is not ‘can CFD do it?’ but rather: ‘Have I made a good mesh?’ ‘Are the boundary conditions I have assumed correct?’ ‘Is the bounding box I have left sufficient?’ ‘Is the wall modelling I have selected correct?’ ‘Does the turbulence model I am using provide accurate results in my application?’

I am sure we could think of more. You have already been able to answer many of those questions yourself through mesh independence studies, comparing different modelling techniques, etc., which is good. However, some questions are best answered by comparing results. You could do this with a case for which you already have data. You don’t need a wind tunnel, and you don’t need to validate CFD itself; you do, however, need to verify your setup, your understanding and your assumptions (after all, modelling is about making correct assumptions). You would hate to find out your results were off because you didn’t explore turbulence models or how big the bounding box is. But most importantly, how much time do you put into investigating all this? Maybe you got it right the first time, in which case additional work is a waste of time! You will only know whether all is good if you compare against something, and then apply what you know works to your own application.

Does that now make more sense?

Best,

Darren

Yes. As far as the setup for my sim goes, I looked at a lot of the tutorials, and my user profile shows I have been reading in this environment for nearly 3 days straight out of the last 4 weeks. I have not yet confirmed that my initial turbulence values are correct for my case; so far I have just used the defaults, and it is on my list to review. I thought that could wait until after I select a mesh to evaluate in detail at various AoAs and airspeeds, even adding some up/down elevator to the geometry to start looking at stability cases. Also, hopefully, if you had seen anything really weird in your brief look at the project, I am sure you would have mentioned it.

I am still stuck on the Independence Study; I will not carry on until it starts making some sense (or some sense gets knocked into me).

Thanks,

Dale

Well, after many days and countless hours I have gotten Cl and Cm to behave.

I did it by changing the refinement procedure used to create the base mesh; only the x, y, z cell settings of its Background Mesh Box are then changed to create all the other meshes in the study.

At some point when I have some time I will post back here what my refinement procedure actually was that allowed me to obtain a minimum value of % inflated for ALL meshes in the study of 98.6%. (EDIT; Actually it needed to be described anyway to carry on, here it is, a few posts ahead of here)

All study meshes have square cells (exactly) on all faces of the Background Mesh Box.

Again, the vertical scale on every plot expresses the plotted parameter as a percentage of the value of that same parameter in the mesh with the highest # of volumes (this highest-volume mesh is expected to give the most accurate results). This eases the mental burden of just looking at numbers and trying to figure out which is better and by what percentage.

Here is the study:

Unfortunately, though Cl and Cm are behaving, pressure drag is not.

EDIT: Since I did this study, I have come to realize that I should not treat Cm as a variable to be evaluated as a percentage of the previous iteration in a mesh independence study. The reason is that we can re-map Cm to any value, including 0, simply by moving the Reference Center of Rotation point that determines the Cm moment arm for any simulation run. So, please ignore the Cm plots…
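To illustrate why Cm depends on the chosen reference point, here is a minimal sketch using the standard small-angle moment transfer relation. It assumes x is measured aft, nose-up moments are positive, and the drag contribution to the moment is negligible; the function names and all numbers are hypothetical.

```python
def shift_cm(cm_ref, cl, x_ref, x_new, ref_length):
    """Pitching-moment coefficient about x_new, given Cm about x_ref.

    Assumptions (not from the thread): x is measured aft, nose-up is
    positive, and the drag contribution to the moment is neglected.
    """
    return cm_ref + cl * (x_new - x_ref) / ref_length


def zero_cm_location(cm_ref, cl, x_ref, ref_length):
    """Reference location at which Cm becomes zero (requires cl != 0)."""
    return x_ref - cm_ref * ref_length / cl


# Hypothetical values, for illustration only.
cm, cl, x_ref, c = -0.10, 0.50, 0.25, 1.0
x0 = zero_cm_location(cm, cl, x_ref, c)
print(f"Cm is zero about x = {x0:.3f}")
print(f"Cm about that point: {shift_cm(cm, cl, x_ref, x0, c):.2e}")
```

Because a reference point exists where Cm is exactly zero, a percentage change in Cm between meshes can be made arbitrarily large or small by the choice of reference point alone.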

Dale

Hi @DaleKramer,

Considering all your data and the work you’ve put in, it probably comes down to the numerical algorithms used for the simulation. A good post from the CFD forums highlights this problem nicely, which I shall quote here. The post asked why the velocity results for that particular simulation were not converging despite finer and finer meshes.

The velocity profile tends to be steeper on finer grids because there’s less numerical dissipation/damping. Convergence doesn’t mean the solution is accurate.

All else being equal (same problem, same initial guess, all settings equal), increasing the mesh resolution (finer mesh) will take more iterations to converge to the same solution.

It’s a result of the implicit discretization schemes which results in a sparse linear system. That is, changes in cell properties only affect their immediate neighbors. Hence, it takes many many iterations for adjustments of the solution to slowly propagate cell by cell throughout the entire domain to reduce the global error.

This behavior can be overcome/accelerated by using a multigrid algorithm to improve the speed at which global errors are reduced, but when you go to a finer grid you need to make the multigrid method more aggressive. If you don’t change these settings in the multigrid solver, your finer grid would still take more iterations (because the coarse grid has more aggressive settings relative to the finer grid).

So basically, at this point the discretization schemes are likely the cause of the continued divergence in results.

The solution would be to try other discretization schemes, maybe higher order ones if the mesh quality is sufficient.

Do see if the sim (the 13 mil mesh) can run with other schemes.

Cheers.

Regards,

Barry

Barry,

Very relevant research, thanks.

I was hoping that there was a specific reason that pressure drag was not converging and it was going to be an easy fix, oh well …

Hopefully I can find a discretization scheme that will at least let me continue to hope that my finer meshes can be EXPECTED to give me the most accurate results.

I will start playing with the Hierarchical and Simple decomposition schemes and see if I can get more confused again.

Thanks for re-directing me with a gentle nudge …

Quick question: will the mesh quality parameters for Tet meshing work on a Hex Para mesh for determining my mesh quality? I had a look at this a few days ago and couldn’t quite determine that.

Dale

Hi @DaleKramer,

I don’t think it’s possible to extrapolate it like that, if I understand correctly what you are trying to do. The simple difference between the cell types should render this comparison null in almost every way.

Mesh quality can be checked at the end of the meshing log, where it gives some details about the mesh quality along with whether the mesh is OK or not. From what I’ve experienced, you’ll find out whether mesh quality is sufficient when you start using higher order schemes. If the simulation diverges or gives an error, it probably indicates inadequate mesh quality.

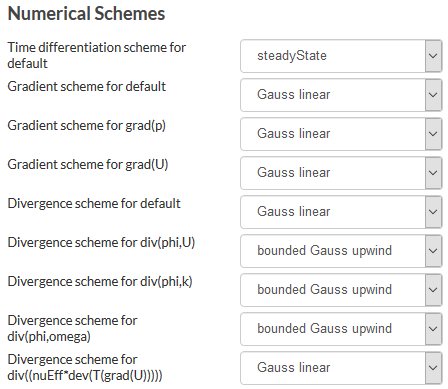

These are decomposition algorithms. They deal with how the work is allocated to the cores of the CPU. What you need to play around with is under Numerics and in particular, the section called Numerical Schemes.

Cheers.

Regards,

Barry

I sorta knew that because I played with them on my Transport Error issue …

That was just my way of asking if I was about to embark on the next journey down the correct road; my newbie status shows again.

Thanks

Before I start down that road, I am now getting concerned about my 13mil mesh quality. From all my reading I am under the impression that that little ‘Mesh Operation Event Log’ message that says ‘Mesh quality check failed. The mesh is not OK.’ can usually be ignored. Perhaps in my case, I can not ignore it.

However, I have never been able to determine what that little error actually means and how to get rid of it, any ideas? (I think my Meshing Log ending does not help me, but I could be wrong)

Here is the message and the end of the Meshing Log:

EDIT: @Get_Barried, sorry to call you back, but I cannot proceed until I sort out this quality check failure.

Thanks,

Dale

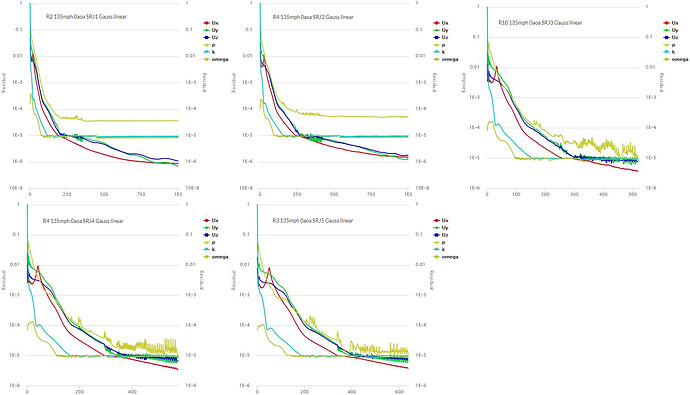

Well I decided to try all the different Gradient Schemes on simulations of mesh SRF3 13mil.

I had no expectations about what I would see in the results and I will present them here so you can suggest my next step.

Here is a chart of the 6 simulations I have run, sorted in the same ‘Scheme’ order as the dropdown selection list for the different schemes. (I started with Test #3, changing only the U gradient scheme because of the unusual Ux spike that I have seen on all simulations of the mesh, but I then decided to change the gradient schemes for Default, p and U for the remaining 5 test sims.)

And I think it would help if I presented the Convergence Plots for all 6 tests.

Here is Test #1 (Gauss linear):

Here is Test #2 (cellLimited Gauss linear LC=1):

Here is Test #3 (U only cellLimited leastSquares LC=1):

Here is Test #4 (cellLimited leastSquares LC=1):

Here is Test #5 (fourth):

Here is Test #6 (leastSquares):

I guess what I was hoping to see was a simulation that removed the peak in the red Ux curve and the dip in the cyan k curve that has always appeared around 50s on ALL sims of this mesh.

Should I pick say the Test #4 scheme and run it on all 5 of the SRF mesh series of different volumes?

Or, can you see a better next step?

Perhaps I should go back and fix the quality error that I am waiting for direction on here…

Thanks

Dale

Hi @DaleKramer,

Apologies for the slow replies. I live in a different time zone (GMT +8), and when I was replying to you previously it was 5am on my end.

Getting the mesh to meet the quality criteria is going to be a little tough, mainly because the parameters dictating the quality of the mesh are not that easy to adjust. I’m unable to guide you on this at the moment, as I have usually ignored this aspect because my results gave me adequate accuracy despite the quality not being OK. I’ve only once had a good mesh quality check for a complex geometry, and that took some time: I got the mesh right by cleaning up the CAD significantly and applying simple meshing refinements to keep things simple.

I would like to help by reading through the settings of SnappyHexMesh and how they dictate the behavior of the mesher, but that will take a significant amount of time, which I do not have at the moment. Thus the natural course of action would be for you to read through them and try to understand how they work, what the quality checker looks for, and how to adjust the specifics of the mesh to meet the quality requirements. The other option would be for someone who is well versed in this to give recommendations.

Getting the mesh quality to be adequate will help ensure that higher order schemes like leastSquares and fourth can be run on your mesh. Further numerical damping may be needed even if the mesh quality is sufficient, because higher order schemes tend to be less stable. That I may be able to advise you on, but trial and error (with some prior knowledge of how the schemes work) would be the way I would get the higher order schemes to be stable.

Sorry Dale, I understand that you want this project completed ASAP, but I will need some time to read through the meshing and numerical schemes. We can converse more on the particular settings needed to get the quality there, but it will be a relatively long and iterative process for sure, unless someone can recommend the right settings.

Cheers.

Regards,

Barry

NEVER any reason to apologize for response time with me. I just carry on, but I try to stay organised by creating new posts when I carry on rather than editing posts (sometimes I edit). I am sorry if that seems like I am pushing; I am not, I am just trying to stay organised.

You have provided me with much help and guidance which has provided much advancement in the ‘education of Dale Kramer’, thank-you.

I have lost all track of time in the last month and it must seem that I am on a madman’s schedule, but in reality I have no schedule. I have just been wanting to use FEM for so many years and it looks like now is the time. Such has been my life; watch out when I commit to something.

My single solid geometry is as clean as I can make it, I am hoping that is not the issue.

My geometry is refined as simply as I can think of, with a generously sized Background Mesh Box (I think, anyway). The method was: one region refinement encompassing the whole plane plus a few feet, one surface refinement on all surfaces to a single level (where Min level = Max level) close to the last layer thickness, and then 3 layers sized for a yPlus of 160.

On SRF3, my Level 0 cell dimension was about 14 in. x 14 in., which makes Level 7 at 0.111 in. square. My 3 layers had a final layer thickness for yPlus =160 of 0.107 in. (using reference length as the length of the whole aircraft).
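The relationship between the Level 0 cell size and the Level 7 cell size follows from the mesher halving the cell edge at each refinement level. A small sketch of that arithmetic (the function name `cell_edge` is illustrative, and 14.0 in. stands in for the "about 14 in." quoted above):

```python
def cell_edge(level0_edge, level):
    """Edge length after `level` successive halvings of the Level 0 cell."""
    return level0_edge / (2 ** level)


# "About 14 in." per the post; the exact Level 0 edge is not given.
level0_in = 14.0

for level in (0, 3, 7):
    print(f"Level {level}: {cell_edge(level0_in, level):.4f} in.")
```

With exactly 14.0 in. this gives about 0.109 in. at Level 7; the quoted 0.111 in. corresponds to a Level 0 edge of roughly 14.2 in., which is consistent with "about 14 in."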

Here you can ‘see’ that my region box was Level 3 refinement and the ‘All surfaces’ refinement was to Level 7 (I think the cells added during layering also show up in level 7 quantities below for some reason):

As far as where to go next…

I will research what the SnappyHexMesh quality checker looks for and try to get ‘fourth’ and ‘leastSquares’ to converge, and then see how those options handle my mesh in a full study spread. In addition, I will likely run a study spread on the already converging cellLimited leastSquares scheme (Test #4).

Now back to bed for me.

Dale

Well, it has been a few days and a lot of work but I have made some progress.

First, it was very difficult, as Barry had suggested, to make any sense of what mesh anomalies may cause that annoying little ‘Mesh Operation Event Log’ message that says ‘Mesh quality check failed. The mesh is not OK.’

I was only able to make 2 of the 5 meshes in my new SRJ study series meshes pass that, not very well documented, Quality check (SRJ1 and SRJ3).

Notwithstanding the fact that only two of my five SRJ meshes are ‘OK’ per the quality check, I believe that my new SRJ series of study meshes must be better quality than the SRF series of the last study that I have shown. The reason I say this is because I was able to get 4 converging ‘leastSquares’ gradient scheme simulations and 1 converging ‘fourth’ gradient scheme simulation from them. I was not able to get any of the previous ‘Not OK’ SRF meshes to converge with ‘leastSquares’ or ‘fourth’ gradient schemes.

In the below presented SRJ study, I am still not happy that Total Drag continues its downward trend. In fact, Total Drag also goes down about 5% for each of the last two increases in mesh number of volumes for the ‘leastSquares’ gradient scheme.

Barry, these results indicate to me that discretization schemes are NOT likely the cause of the continued divergence in the results, specifically Pressure Drag. I say that because ‘leastSquares’ Total Drag follows ‘Gauss Linear’ Total Drag in the same downward trend. Any other ideas?

Here is the SRJ Mesh Independence Study:

Here is how I was able to increase the quality of the SRF meshes to reach that of the SRJ meshes:

1. Set ‘Min tetrahedron-quality for cells’ to 6.1e-9. The default for this parameter is -1e+30, which actually turns off this quality assurance parameter. I am not sure why the default is off, other than it is ‘easier’ to layer a mesh with it ‘nearly’ off (see here). The problem is that with this parameter OFF, I think that leaves cells of low quality in the mesh, which in turn may generate the ‘Not OK’ quality failure notice and reduce the accuracy of your results. I think it is worth the time to refine the mesh better before you layer it rather than band-aid a layering failure by turning off this quality assurance parameter.

   As far as why I chose the value I did: I tried values on both sides of this, but I felt this was the highest value that reliably left my SRJ3 mesh quality notice as ‘OK’. I think this value may need to be tweaked further, probably on an individual mesh basis.

2. Although #1 could likely have increased the quality of my meshes enough by itself so that higher order gradient schemes would be more likely to converge, I found a units discrepancy that I chose to fix for the SRJ mesh series.

   The default value for the parameter ‘Min cell volume [in³]’ is 1e-13, while the same parameter in a project with SI units, ‘Min cell volume [m³]’, is also 1e-13. I made the assumption that the correct default was the SI units value.

   Therefore I converted 1e-13 m³ to 6.1e-9 in³ and used this as my value for ‘Min cell volume [in³]’.

I think this is a significant discrepancy and I hope someone looks into it…
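For anyone wanting to check the conversion, here is a short sketch. It relies only on the exact definition 1 in = 0.0254 m; the function names are illustrative.

```python
INCH_IN_METRES = 0.0254  # exact by definition


def m3_to_in3(volume_m3):
    """Convert a volume from cubic metres to cubic inches."""
    return volume_m3 / INCH_IN_METRES ** 3


def in3_to_m3(volume_in3):
    """Convert a volume from cubic inches to cubic metres."""
    return volume_in3 * INCH_IN_METRES ** 3


# The SI default of 1e-13 m^3 expressed in cubic inches.
print(f"1e-13 m^3  = {m3_to_in3(1e-13):.3e} in^3")

# What an unconverted 1e-13 in^3 default would mean in SI units.
print(f"1e-13 in^3 = {in3_to_m3(1e-13):.3e} m^3")
```

The first line reproduces the 6.1e-9 in³ figure. The second shows that leaving 1e-13 unchanged in an inch-based project corresponds to a minimum cell volume nearly five orders of magnitude smaller than the SI default.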

Also, as an aside, I have discovered a Snappy meshing characteristic that disturbs me. If I make a copy of any mesh, delete its result and then re-run it with the exact same set of parameter values, I end up with a mesh with a different number of cell volumes. I believe this can have many serious implications. I have not really thought it through yet, but does this concern anyone else?

Dale

Hi @DaleKramer,

As always, great work going through the nuances of getting the mesh quality check passed and posting the approach you used to achieve it. It’s a great contribution to resolving future problems with the mesh, for sure.

Odd. In that case maybe the schemes are not the issue. It is tough to think of what else the issue could be. Are you able to share the convergence plots (if they are not the same as above) and the results control for the meshes where the quality check passed and you managed to simulate them successfully with both the first and higher order schemes? I need a little more information at this point.

Ah yes, a previous user has experienced this issue as well. While I do not have a definitive answer as to why it happens, I think I gave a hypothesis that, because SnappyHexMesh is iterative, the more complex the geometry, the more prone it is to divergence in the final mesh. I may be completely wrong, and I will probably look into it if it starts really affecting my results. I still do not understand the implications of this very well, and a quick search does not seem to yield any valuable answers. I would assume there would be differences in results, but at the level I’ve mostly worked at, the difference is not significant enough to warrant resolving such an issue.

Looking forward to your reply.

Cheers.

Regards,

Barry

Thanks for the encouragement!!

Here are Gauss limited convergence plots:

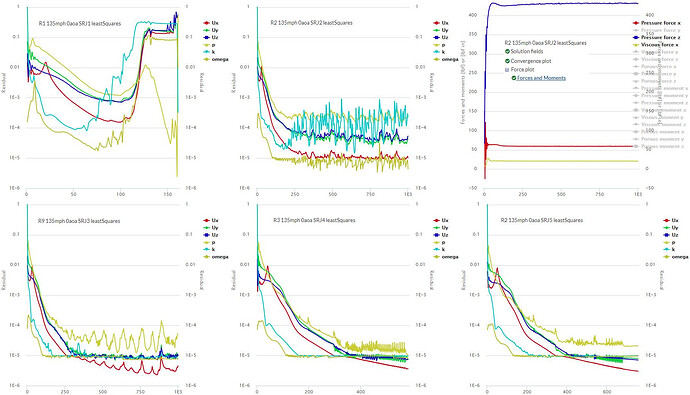

Here are the leastSquares convergence plots:

Here are the fourth convergence plots:

My brain continues to think that since we are having Pressure Drag anomalies in the Mesh Independence Study, that the red peak in the Ux (the direction of Pressure Drag) is related to the anomalies.

Under that premise, perhaps it is a turbulence issue because a slight cyan dip (k value) precedes the red peak.

The cyan dip and red peak are present in ALL convergence plots.

Any ideas on how to get rid of the cyan dip and red peak?

Otherwise, do these plots help you see a road to continue on to track down these Pressure Drag anomalies?

I do think that I will start considering all meshes as unique items since I can never exactly reproduce them.

I cannot think of an iterative reason that they would be different. I assume that each time they are run with the same parameter values, they use the same mathematical precision and formulas, so each calculation should lead to the same decision based on the calculation’s result.

Anyway, since even a few bad cells could cause a ‘hole in the dyke’ that good results could flow through, I am concerned.

I think that we should start a new ongoing topic for this, what do you think?

Dale

Only the SRJ3 mesh, that passed the Quality Test as ‘OK’, converged with more than the Gauss limited scheme.

The ‘OK’ SRJ3 converged further with only the leastSquares scheme (diverged with ‘fourth’ scheme).

Is this what you mean by its ‘results control’ (of the SRJ3 mesh):

Thanks,

Dale

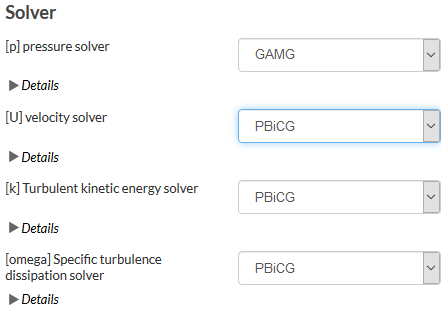

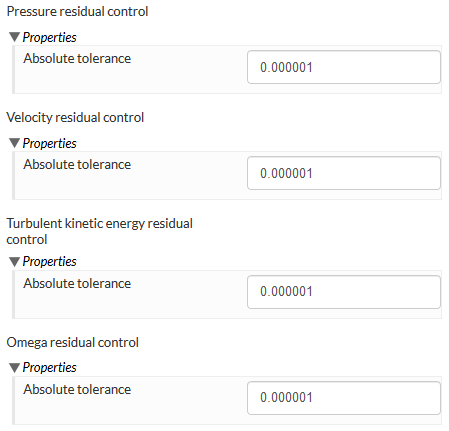

Hi @DaleKramer,

Thanks for the posts on the convergence plots. I will only consider those that have either converged or reached the simulation end time. Plots like the second figure, top left (R1 135mph 0aoa SRJ1 leastsquares), and almost all of the fourth plots except the bottom left (R5 134 mph 0aoa SRJ4 fourth) have diverged, and the results obtained from those cannot be used. Further adjustment of the meshes or numerical parameters may be needed to try to get these plots to converge. We’ll probably work on them later, as higher order schemes don’t seem to yield the result behavior we expect.

If you look at the plots that do converge, we see acceptable margins of convergence even for the residuals of Ux. The residual that does not converge as well tends to be the pressure (p, yellow), and from what I’ve experienced this is usually due to the mesh and how the velocity interacts with the mesh itself. However, some simulations do give good convergence for that value, and the ones that don’t converge as well are still of acceptable convergence.

Side note, what we deem by convergence is typically having the residuals at 1E-4 and below. Ideally everything would be 1E-6 or below but 1E-4 is deemed loosely converged. We can probably set a reasonable limit of 1E-5 as the acceptable convergence margin that we’re looking for.
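These thresholds can be turned into a simple check on the final residuals of a run. The sketch below is only an illustration: the function name, the labels and the residual values are hypothetical, while the three limits are the ones stated above.

```python
def classify_convergence(final_residuals, loose=1e-4, target=1e-5, ideal=1e-6):
    """Classify a run by its worst (largest) final residual.

    The limits follow the rule of thumb in the post: 1E-4 loosely
    converged, 1E-5 the agreed working limit, 1E-6 the ideal.
    """
    worst = max(final_residuals.values())
    if worst <= ideal:
        return "ideal"
    if worst <= target:
        return "meets target"
    if worst <= loose:
        return "loosely converged"
    return "not converged"


# Hypothetical final residuals, for illustration only.
run = {"Ux": 2e-6, "Uy": 1e-6, "Uz": 1e-6, "p": 4e-5, "k": 8e-7, "omega": 5e-7}
print(classify_convergence(run))  # pressure is the limiting residual here
```

Classifying by the worst residual reflects the point that pressure often lags the other fields, so it usually decides the verdict.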

So, here is what I think we can try. We should definitely try to get the results to fully converge first, then get them below our arbitrarily set limit of 1E-5. I suggest picking two of your best meshes (probably SRJ4 and SRJ3 or something; you can determine this), performing a first order simulation with the default solver schemes, and adjusting the residual controls for pressure and all corresponding residual controls to 1E-6. I’ve attached screenshots below of the default parameters you should adjust. Once this is done, increase the simulation end time to 2000s and post the convergence plots and result control plots here for those two meshes.

Results control is under the simulation tab and is a way to check for additional convergence while getting a good estimate of the actual values that the simulation is obtaining. You’ve already posted a result control in the second set of figures for leastSquares; it’s at the top right corner. Do ensure you apply this result monitoring to both of the meshes that we’re going to simulate again so we can monitor them.

After we get steady-state convergence, i.e. when the residuals or the result controls have reached a steady state, we can work on bringing any less-than-preferred residuals down to our set limit. We will probably do this by adjusting the relaxation factors, but that will entail a longer simulation end time, so we will deal with that later. That is, of course, provided that whatever we did to get steady-state results does not by itself resolve our continued divergence in results.

It is very possible for small issues in meshes to cause large deviations in results. Whether it is worth it for the user to spend a significant amount of time refining their meshes or troubleshooting in order to remove this discrepancy is debatable, depending on why exactly it occurs. So yes, I would say we should start a topic on this. However, I would like to resolve this issue of yours first before we move on. Then we can spend our efforts tackling the other topic. You are welcome, however, to start on it if you have ample free time.

Looking forward to the results. Cheers.

Regards,

Barry

EDIT: On a side note about peculiar issues with the mesher that have not been resolved: I’ve encountered several issues like the one in this post, where the mesh seems to behave very strangely. Maybe we can start a mega-thread (with your observation about each mesh not being the same), post all the peculiar problems, and try to fix or understand them. It would be very beneficial for everyone once we figure them out and post the solutions.

As I realize that this topic will likely be a great help to other new users, I appreciate that you are expanding on things that I now take for granted, thanks.

All of the runs had residuals for convergence set to the default 1E-5.

I’m on it.

I have already confirmed that the issue was not the fact that I had only used Default Initial Conditions for k and Omega. I put realistic values in using a reference length of my fuselage length and got very little difference in force results.

Also, I had already started relaxing to try to converge the SRJ3 mesh with the ‘fourth’ scheme, with no luck. Here is the convergence plot for U, k, Omega relaxed to 0.3 (the cyan dip and red peak are gone):

Sorry, I was confused when you asked me to provide the ‘results control’ of a mesh rather than the ‘results control’ of a simulation run, which I was familiar with.

Here is the sim run results for run ‘R9 135mph 0aoa SRJ3 leastSquares’ (stable to less than +/- 0.5% after 300s):

Thanks, I had not come across this topic. I am already playing with a copy of his project to see if my layering methods will help. He never did get a 15 layer mesh down to a 0.0002 m first layer.

I agree and will add that to my ‘ToDo’ list unless you start one first.

But the problem with mega threads is that it gets very hard to follow just the ‘each mesh not the same’ issue if a lot of other issues are being discussed too…

Dale